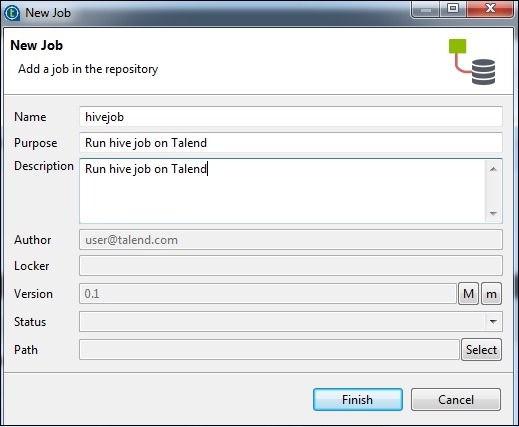

Creating a Talend Hive Job

As an example, we will load NYSE data into a Hive table and run a basic Hive query. Right-click Job Design and create a new job named hivejob. Enter the details of the job and click Finish.

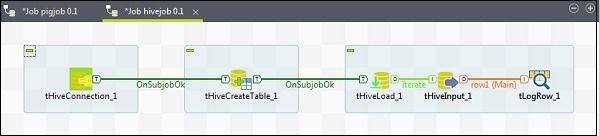

Adding Components to Hive Job

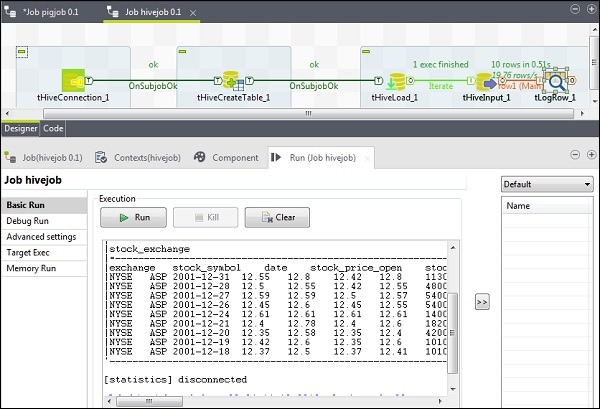

To add components to the Hive job, drag and drop five Talend components (tHiveConnection, tHiveCreateTable, tHiveLoad, tHiveInput and tLogRow) from the Palette to the designer window. Then connect them as follows:

- Right-click tHiveConnection and create an OnSubjobOk trigger to tHiveCreateTable.

- Right-click tHiveCreateTable and create an OnSubjobOk trigger to tHiveLoad.

- Right-click tHiveLoad and create an Iterate link to tHiveInput.

- Right-click tHiveInput and create a Main row to tLogRow.
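The connections described above form a small directed graph. As a rough mental model only (this is not how Talend stores or schedules jobs), the sketch below records each connection as a hypothetical `(source, link type, target)` tuple and uses a topological sort to derive the order in which the components come into play. In the real job, tHiveInput and tLogRow run together, with rows streaming over the Main connection; the sort only captures which component depends on which.

```python
from collections import deque

# Hypothetical model of the hivejob design: (source, link type, target).
# The link names mirror the Talend connection types used in this tutorial.
LINKS = [
    ("tHiveConnection", "OnSubjobOk", "tHiveCreateTable"),
    ("tHiveCreateTable", "OnSubjobOk", "tHiveLoad"),
    ("tHiveLoad", "Iterate", "tHiveInput"),
    ("tHiveInput", "Main", "tLogRow"),
]


def execution_order(links):
    """Return components in dependency order using Kahn's topological sort."""
    nodes = []
    for src, _, dst in links:
        for name in (src, dst):
            if name not in nodes:
                nodes.append(name)
    indegree = {name: 0 for name in nodes}
    children = {name: [] for name in nodes}
    for src, _, dst in links:
        children[src].append(dst)
        indegree[dst] += 1
    # Start from components that nothing else points to (tHiveConnection).
    queue = deque(name for name in nodes if indegree[name] == 0)
    order = []
    while queue:
        node = queue.popleft()
        order.append(node)
        for child in children[node]:
            indegree[child] -= 1
            if indegree[child] == 0:
                queue.append(child)
    if len(order) != len(nodes):
        raise ValueError("job graph contains a cycle")
    return order


for step, name in enumerate(execution_order(LINKS), start=1):
    print(step, name)
```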

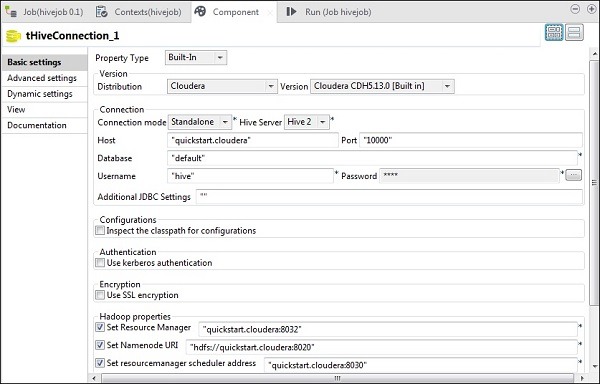

Configuring Components and Transformations

In tHiveConnection, set Distribution to Cloudera and select the version you are using. Note that the connection mode will be Standalone and the Hive Service will be Hive 2. Also check that the following parameters are set accordingly:

- Host: “quickstart.cloudera”

- Port: “10000”

- Database: “default”

- Username: “hive”

Note that the password is filled in automatically, so you do not need to edit it. The other Hadoop properties are also preset with default values.
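In Standalone mode with Hive 2, we assume the component talks to HiveServer2, which is normally addressed by a JDBC URL of the form `jdbc:hive2://<host>:<port>/<database>`. The hypothetical helper below (not code that Talend generates) shows how the parameters listed above fit into that URL. The username is passed to the driver separately rather than embedded in the URL.

```python
def hive_jdbc_url(host: str, port: int, database: str) -> str:
    """Assemble a HiveServer2 JDBC URL: jdbc:hive2://<host>:<port>/<database>."""
    return f"jdbc:hive2://{host}:{port}/{database}"


# Values from the tHiveConnection settings above. The password is omitted
# because Talend fills it in; the username is supplied to the driver
# separately, not as part of the URL.
settings = {
    "host": "quickstart.cloudera",
    "port": 10000,
    "database": "default",
    "username": "hive",
}

url = hive_jdbc_url(settings["host"], settings["port"], settings["database"])
print(url)  # jdbc:hive2://quickstart.cloudera:10000/default
```

The same URL works with other HiveServer2 clients. For example, `beeline -u "jdbc:hive2://quickstart.cloudera:10000/default" -n hive` should open a session if the server is reachable, which is a quick way to check connectivity before running the job.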

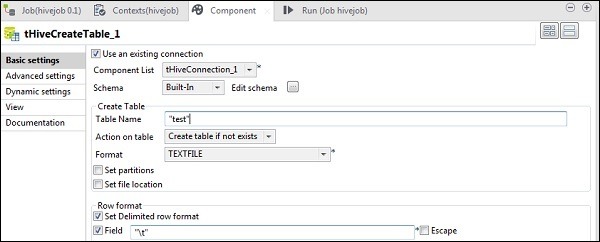

In tHiveCreateTable, select Use an existing connection and choose tHiveConnection in the Component List. In Table Name, enter the name of the table you want to create in the default database. Keep the other parameters as shown below.
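For reference, the table that tHiveCreateTable creates corresponds roughly to a HiveQL `CREATE TABLE` statement. The sketch below builds such a statement for comma-delimited text data. The column names and types, the `,` delimiter and the table name `test` are all assumptions for illustration; use the schema and settings shown in the screenshot for your own job.

```python
# Hypothetical schema: the real columns are the ones configured in the
# tHiveCreateTable screenshot; these names and types are illustrative only.
NYSE_COLUMNS = [
    ("exchange_name", "STRING"),
    ("stock_symbol", "STRING"),
    ("trade_date", "STRING"),
    ("stock_price_close", "DOUBLE"),
]


def create_table_hql(table, columns, delimiter=","):
    """Build a HiveQL CREATE TABLE statement for delimited text files."""
    cols = ",\n  ".join(f"{name} {hive_type}" for name, hive_type in columns)
    return (
        f"CREATE TABLE IF NOT EXISTS {table} (\n  {cols}\n)\n"
        f"ROW FORMAT DELIMITED FIELDS TERMINATED BY '{delimiter}'\n"
        "STORED AS TEXTFILE"
    )


print(create_table_hql("test", NYSE_COLUMNS))
```

Declaring the delimiter explicitly matters because Hive's default field separator for text tables is not a comma, so a CSV file loaded into a table without it would not be split into columns as expected.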

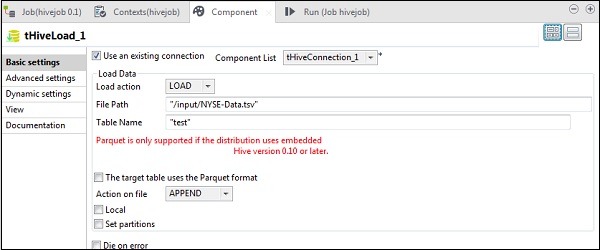

In tHiveLoad, select “Use an existing connection” and choose tHiveConnection in the Component List. Select LOAD as the Load action. In File Path, enter the HDFS path of your NYSE input file. In Table Name, enter the table into which you want to load the input. Keep the other parameters as shown below.
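We assume that the LOAD action corresponds to Hive's `LOAD DATA INPATH ... INTO TABLE ...` statement. The sketch below builds that statement. The HDFS path `/user/cloudera/NYSE.csv` and the table name `test` are hypothetical placeholders.

```python
def load_data_hql(hdfs_path, table, overwrite=False):
    """Build a HiveQL LOAD DATA statement for a file that is already in HDFS.

    Without the LOCAL keyword, Hive moves the file from its HDFS location
    into the table's storage directory; OVERWRITE replaces existing data.
    """
    if "'" in hdfs_path:
        raise ValueError("path must not contain single quotes")
    parts = ["LOAD DATA", f"INPATH '{hdfs_path}'"]
    if overwrite:
        parts.append("OVERWRITE")
    parts.append(f"INTO TABLE {table}")
    return " ".join(parts)


# Hypothetical HDFS path and table name; substitute your own.
print(load_data_hql("/user/cloudera/NYSE.csv", "test"))
# LOAD DATA INPATH '/user/cloudera/NYSE.csv' INTO TABLE test
```

Because Hive moves rather than copies the source file, the input will no longer be at its original HDFS path after the job runs. Keep a copy if you plan to rerun the job.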

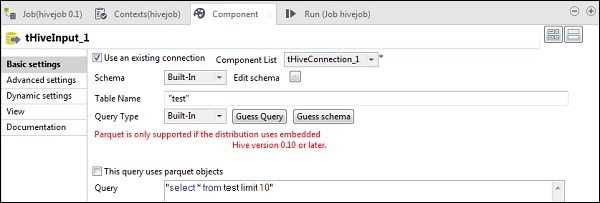

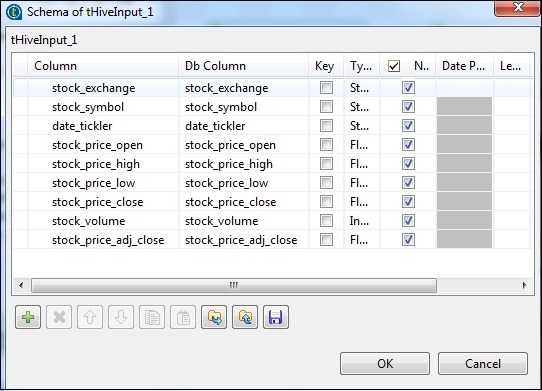

In tHiveInput, select Use an existing connection and choose tHiveConnection in the Component List. Click Edit schema and add the columns and their types as shown in the schema snapshot below. Then enter the name of the table you created in tHiveCreateTable.

In the Query field, enter the query you want to run on the Hive table. Here we print all the columns of the first 10 rows of the test Hive table.
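The query for this step is a plain `SELECT ... LIMIT 10`. The hypothetical helper below builds it either with `*` or with an explicit column list. Listing the columns in the same order as the tHiveInput schema is a cautious choice, since the component maps result columns onto the schema you defined. The table and column names are placeholders.

```python
def first_rows_query(table, columns=None, limit=10):
    """Build a HiveQL query returning the given columns (or all) of `limit` rows."""
    if limit < 1:
        raise ValueError("limit must be a positive integer")
    select_list = ", ".join(columns) if columns else "*"
    return f"SELECT {select_list} FROM {table} LIMIT {limit}"


print(first_rows_query("test"))  # SELECT * FROM test LIMIT 10
print(first_rows_query("test", ["stock_symbol", "stock_price_close"]))
# SELECT stock_symbol, stock_price_close FROM test LIMIT 10
```

Without an `ORDER BY`, "the first 10 rows" means whichever 10 rows Hive happens to return first, not a guaranteed order.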

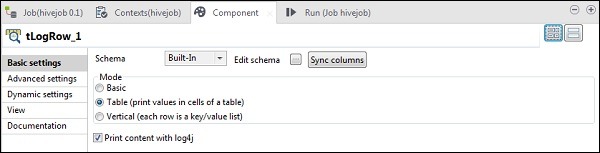

In tLogRow, click Sync columns and select Table mode to display the output.
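Table mode prints each row as a line of a bordered text table instead of a flat delimited string. The sketch below roughly approximates that layout, padding every column to its widest value. It is not Talend's exact output format. The header and row values are made-up placeholders, not NYSE data.

```python
def render_table(header, rows):
    """Render rows as a bordered text table, roughly like tLogRow's Table mode."""
    cells = [[str(v) for v in header]] + [[str(v) for v in row] for row in rows]
    # Each column is as wide as its longest value (header included).
    widths = [max(len(r[i]) for r in cells) for i in range(len(header))]
    border = "+" + "+".join("-" * (w + 2) for w in widths) + "+"

    def line(values):
        return "| " + " | ".join(v.ljust(w) for v, w in zip(values, widths)) + " |"

    out = [border, line(cells[0]), border]
    out.extend(line(r) for r in cells[1:])
    out.append(border)
    return "\n".join(out)


# Placeholder values for illustration only.
print(render_table(["stock_symbol", "stock_price_close"], [("AAA", 10.5), ("BBB", 7.25)]))
```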

Executing the Hive Job

Click Run to start the execution. If the connection and all the parameters are set correctly, you will see the output of your query as shown below.