Introduction

Talend Open Studio – Big Data is a free, open source tool for processing data in a big data environment. It provides many big data components that let you create and run Hadoop jobs by simply dragging and dropping a few components.

Besides, you do not need to write long MapReduce programs by hand; Talend Open Studio – Big Data generates the MapReduce code for you from the components in your job. You only need to drag and drop the components and configure a few parameters.
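To give a feel for the kind of logic you would otherwise write by hand, here is a minimal word-count sketch of the map, shuffle, and reduce phases in plain Java. It is an illustration only: it runs in a single JVM without Hadoop, it is not the code that Talend generates, and the class and method names (`WordCountSketch`, `map`, `shuffle`, `reduce`, `wordCount`) are made up for this example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Illustrative only: a plain-Java stand-in for the map, shuffle, and reduce
// phases. This is NOT the code that Talend generates, and it does not use Hadoop.
class WordCountSketch {

    // Map phase: emit a (word, 1) pair for every word in one input line.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : line.toLowerCase().split("\\s+")) {
            if (!word.isEmpty()) {
                pairs.add(Map.entry(word, 1));
            }
        }
        return pairs;
    }

    // Shuffle phase: group the emitted values by key, with keys kept sorted.
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // Reduce phase: sum the values collected for one key.
    static int reduce(List<Integer> counts) {
        int sum = 0;
        for (int count : counts) {
            sum += count;
        }
        return sum;
    }

    // Runs the three phases in order over all input lines.
    static Map<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        Map<String, Integer> result = new TreeMap<>();
        shuffle(pairs).forEach((word, counts) -> result.put(word, reduce(counts)));
        return result;
    }

    public static void main(String[] args) {
        // prints {big=2, data=1, jobs=1}
        System.out.println(wordCount(List.of("big data", "Big jobs")));
    }
}
```

A real Hadoop job adds job configuration, input and output formats, and distribution of the work across a cluster on top of this logic, which is the part the drag-and-drop components are meant to save you from writing.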

It also lets you connect to several big data distributions, such as Cloudera, Hortonworks, MapR, Amazon EMR, and plain Apache Hadoop.

Talend Components for Big Data

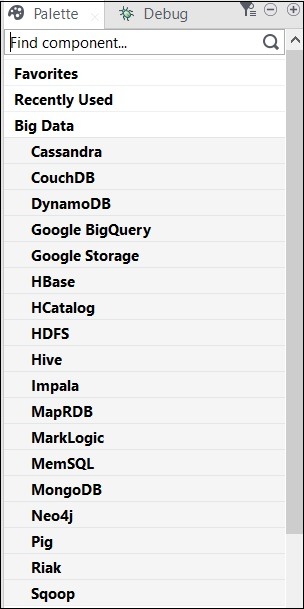

The image above shows the component categories, grouped under Big Data, that are used to run a job in a big data environment.

The Big Data connectors and components in Talend Open Studio are listed below −

- tHDFSConnection − Used for connecting to HDFS (Hadoop Distributed File System).

- tHDFSInput − Reads the data from the given HDFS path, puts it into a Talend schema, and then passes it to the next component in the job.

- tHDFSList − Retrieves all the files and folders in the given HDFS path.

- tHDFSPut − Copies a file or folder from the local file system (user-defined) to HDFS at the given path.

- tHDFSGet − Copies a file or folder from HDFS to the local file system (user-defined) at the given path.

- tHDFSDelete − Deletes a file from HDFS.

- tHDFSExist − Checks whether a file is present on HDFS or not.

- tHDFSOutput − Writes data flows to HDFS.

- tCassandraConnection − Opens a connection to a Cassandra server.

- tCassandraRow − Runs CQL (Cassandra Query Language) queries on the specified database.

- tHBaseConnection − Opens a connection to an HBase database.

- tHBaseInput − Reads data from an HBase database.

- tHiveConnection − Opens a connection to a Hive database.

- tHiveCreateTable − Creates a table inside a Hive database.

- tHiveInput − Reads data from a Hive database.

- tHiveLoad − Writes data to a Hive table or a specified directory.

- tHiveRow − Runs HiveQL queries on the specified database.

- tPigLoad − Loads input data into an output stream.

- tPigMap − Used for transforming and routing the data in a Pig process.

- tPigJoin − Performs a join of two files based on join keys.

- tPigCoGroup − Groups and aggregates the data coming from multiple inputs.

- tPigSort − Sorts the given data based on one or more defined sort keys.

- tPigStoreResult − Stores the result of a Pig operation in a defined storage space.

- tPigFilterRow − Filters the specified columns in order to split the data based on the given condition.

- tPigDistinct − Removes the duplicate tuples from the relation.

- tSqoopImport − Transfers data from a relational database, such as MySQL or Oracle DB, to HDFS.

- tSqoopExport − Transfers data from HDFS to a relational database, such as MySQL or Oracle DB.