Amazon S3 (Simple Storage Service) is a scalable cloud storage service from Amazon Web Services (AWS) used by customers worldwide. Data in Amazon S3 is stored as objects inside containers called buckets. You can create multiple buckets in Amazon S3 cloud storage and configure permissions for users who need to access the buckets. By default, users can access data stored in Amazon S3 buckets by using the AWS web interface.

However, a user may need to access a bucket in Amazon S3 cloud storage by using the interface of an operating system such as Linux or Windows. Access to Amazon S3 cloud storage from the command line is useful on operating systems that don’t have a graphical user interface (GUI), in particular virtual machines running in the public cloud. It is also useful for automating tasks such as copying files or making data backups. This blog post explains how to mount Amazon S3 cloud storage to a local directory on Linux, Windows, and macOS machines so that you can use Amazon S3 for file sharing without a web browser.

Mounting Amazon S3 Cloud Storage in Linux

AWS provides an API to work with Amazon S3 buckets using third-party applications. You can even write your own application that can interact with S3 buckets by using the Amazon API. You can create an application that uses the same path for uploading files to Amazon S3 cloud storage and provide the same path on each computer by mounting the S3 bucket to the same directory with S3FS. In this tutorial we use S3FS to mount an Amazon S3 bucket as a disk drive to a Linux directory.

S3FS, a solution based on FUSE (Filesystem in Userspace), was developed to mount S3 buckets to directories in Linux, much as you mount a CIFS or NFS share as a network drive. S3FS is free and open source. After mounting Amazon S3 cloud storage with S3FS on your Linux machine, you can use cp, mv, rm, and other commands in the Linux console to work with files as you do with mounted local or network drives. S3FS is written in C++, and you can familiarize yourself with the source code on GitHub.

Let’s find out how to mount an Amazon S3 bucket to a Linux directory with Ubuntu 18.04 LTS as an example. A fresh installation of Ubuntu is used in this walkthrough.

Update the repository tree:

sudo apt-get update

If FUSE is already installed on your Linux system, remove it before configuring the environment and installing s3fs to avoid conflicts. As we’re using a fresh installation of Ubuntu, we don’t need to run the sudo apt-get remove fuse command.

Install s3fs from online software repositories:

sudo apt-get install s3fs

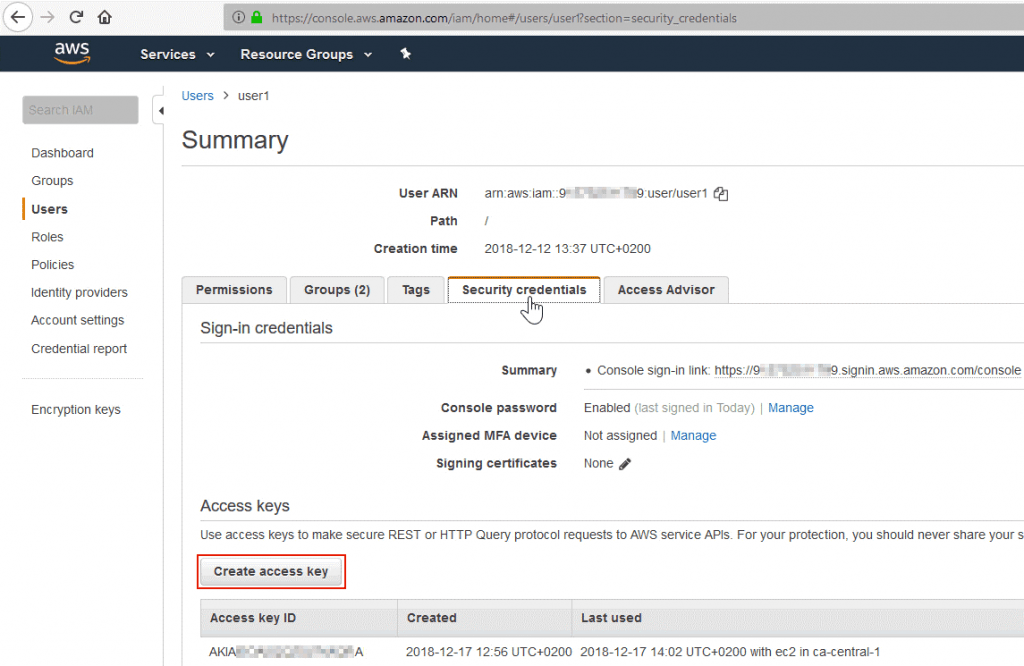

You need to generate the access key ID and secret access key in the AWS web interface for your account (IAM user). The IAM user must have S3 full access. You can use this link:

https://console.aws.amazon.com/iam/home?#/security_credentials

Note: It is recommended to mount Amazon S3 buckets as a regular user with restricted permissions and use users with administrative permissions only for generating keys.

These keys are needed for AWS API access. You must have administrative permissions to generate the AWS access key ID and AWS secret access key. If you don’t have enough permissions, ask your system administrator to generate the AWS keys for you. The administrator can generate the AWS keys for a user account in the Users section of the AWS console in the Security credentials tab by clicking the Create access key button.

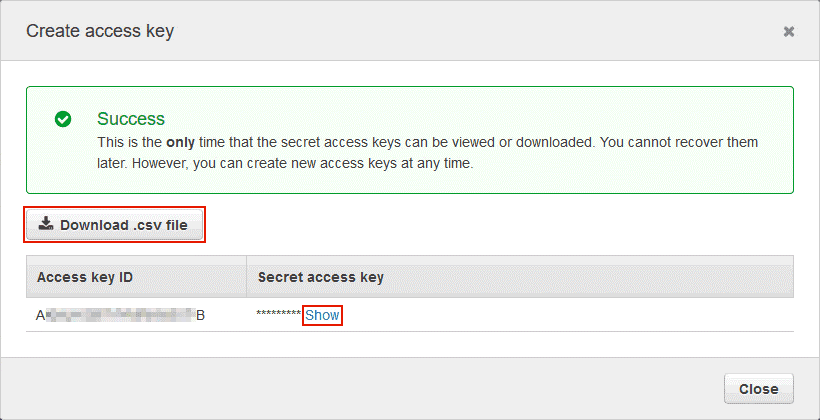

In the Create access key popup window, click Download .csv file or click Show under the Secret access key row name. This is the only time you can see the secret access key in the AWS web interface. Store the AWS access key ID and secret access key in a safe place.

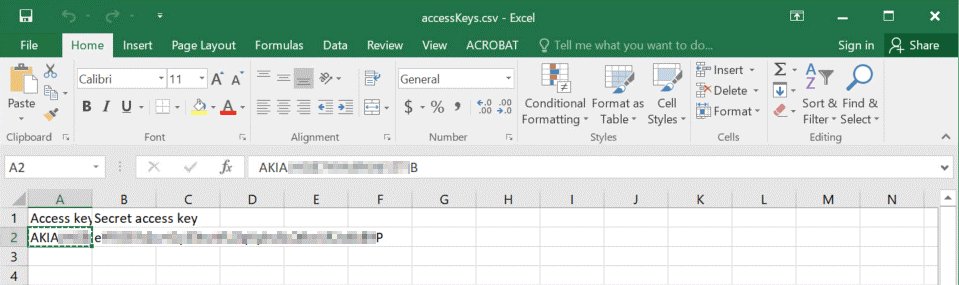

You can open the downloaded CSV file that contains access keys in Microsoft Office 365 Excel, for example.

Go back to the Ubuntu console to create a configuration file for storing the AWS access key and secret access key needed to mount an S3 bucket with S3FS. The command to do this is:

echo ACCESS_KEY:SECRET_ACCESS_KEY > PATH_TO_FILE

Change ACCESS_KEY to your AWS access key and SECRET_ACCESS_KEY to your secret access key.

In this example, we will store the configuration file with the AWS keys in the home directory of our user. Make sure that you store the file with the keys in a safe place that is not accessible by unauthorized persons.

echo AKIA4SK3HPQ9FLWO8AMB:esrhLH4m1Da+3fJoU5xet1/ivsZ+Pay73BcSnzcP > ~/.passwd-s3fs

Check whether the keys were written to the file:

cat ~/.passwd-s3fs

Set correct permissions for the passwd-s3fs file where the access keys are stored:

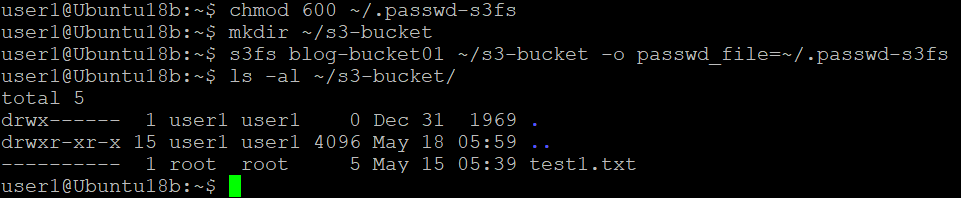

chmod 600 ~/.passwd-s3fs
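The echo and chmod steps above can be combined so that the credentials file is created with restrictive permissions from the start. The following is a minimal sketch, not part of S3FS: the write_s3fs_passwd function name is hypothetical, and the key values are placeholders rather than real credentials.

```shell
# Hypothetical helper: write ACCESS_KEY:SECRET_ACCESS_KEY to a file that
# only the owner can read or write.
write_s3fs_passwd() {
    wp_access_key="$1"
    wp_secret_key="$2"
    wp_target="$3"
    (
        # Inside this subshell, newly created files get mode 600.
        umask 077
        printf '%s:%s\n' "$wp_access_key" "$wp_secret_key" > "$wp_target"
    ) || return 1
    # Also tighten the mode if the file already existed with looser permissions.
    chmod 600 "$wp_target"
}

# Demonstration with placeholder values, written to a temporary file.
demo_file="$(mktemp)"
write_s3fs_passwd "EXAMPLEACCESSKEYID" "exampleSecretAccessKey" "$demo_file"
ls -l "$demo_file"
```

On a real machine you would pass ~/.passwd-s3fs as the third argument instead of a temporary file.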

Create the directory that will be used as the mount point for your S3 bucket. In this example, we create the s3-bucket directory in the user’s home directory.

mkdir ~/s3-bucket

You can also use an existing empty directory.

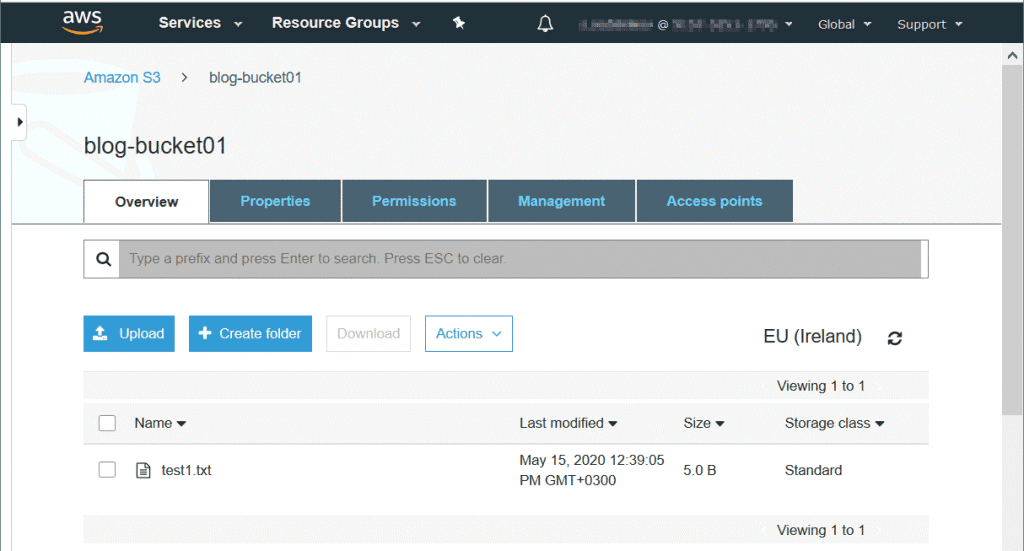

The name of the bucket used in this walkthrough is blog-bucket01. The test1.txt file was uploaded to blog-bucket01 in Amazon S3 before mounting the bucket to a Linux directory. It is not recommended to use a dot (.) in bucket names.

Let’s mount the bucket. Use the following command to set the bucket name, the path to the directory used as the mount point and the file that contains the AWS access key and secret access key.

s3fs bucket-name /path/to/mountpoint -o passwd_file=/path/passwd-s3fs

In our case, the command we use to mount our bucket is:

s3fs blog-bucket01 ~/s3-bucket -o passwd_file=~/.passwd-s3fs

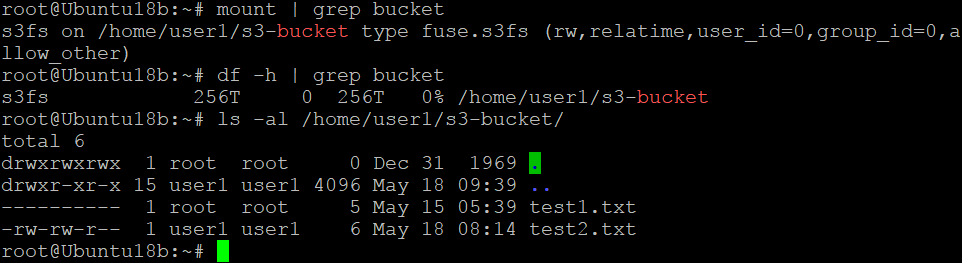
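Before running the mount command, it can help to check the conditions this walkthrough relies on: the mount point exists and is empty, and the key file is readable with mode 600. The sketch below is illustrative only. The s3fs_preflight function name and its messages are hypothetical, and it assumes GNU stat, as shipped with Ubuntu.

```shell
# Hypothetical pre-mount checks for the mount point and the key file.
s3fs_preflight() {
    pf_mount_point="$1"
    pf_passwd_file="$2"

    if [ ! -d "$pf_mount_point" ]; then
        echo "mount point $pf_mount_point does not exist" >&2
        return 1
    fi
    # A mount point that already contains files is typically rejected
    # unless the nonempty option is used.
    if [ -n "$(ls -A "$pf_mount_point")" ]; then
        echo "mount point $pf_mount_point is not empty" >&2
        return 1
    fi
    if [ ! -r "$pf_passwd_file" ]; then
        echo "key file $pf_passwd_file is missing or unreadable" >&2
        return 1
    fi
    # GNU stat prints the permission bits in octal; the walkthrough uses 600.
    if [ "$(stat -c '%a' "$pf_passwd_file")" != "600" ]; then
        echo "key file $pf_passwd_file should have mode 600" >&2
        return 1
    fi
    return 0
}

# Usage on a machine where s3fs is installed (left commented out here):
# s3fs_preflight "$HOME/s3-bucket" "$HOME/.passwd-s3fs" && \
#     s3fs blog-bucket01 "$HOME/s3-bucket" -o passwd_file="$HOME/.passwd-s3fs"
```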

The bucket is mounted. We can run the following commands to check whether our bucket (blog-bucket01) has been mounted to the s3-bucket directory:

mount | grep bucket

df -h | grep bucket

Let’s check the contents of the directory to which the bucket has been mounted:

ls -al ~/s3-bucket

As you can see in the screenshot below, the test1.txt file uploaded earlier via the web interface is present and displayed in the console output.

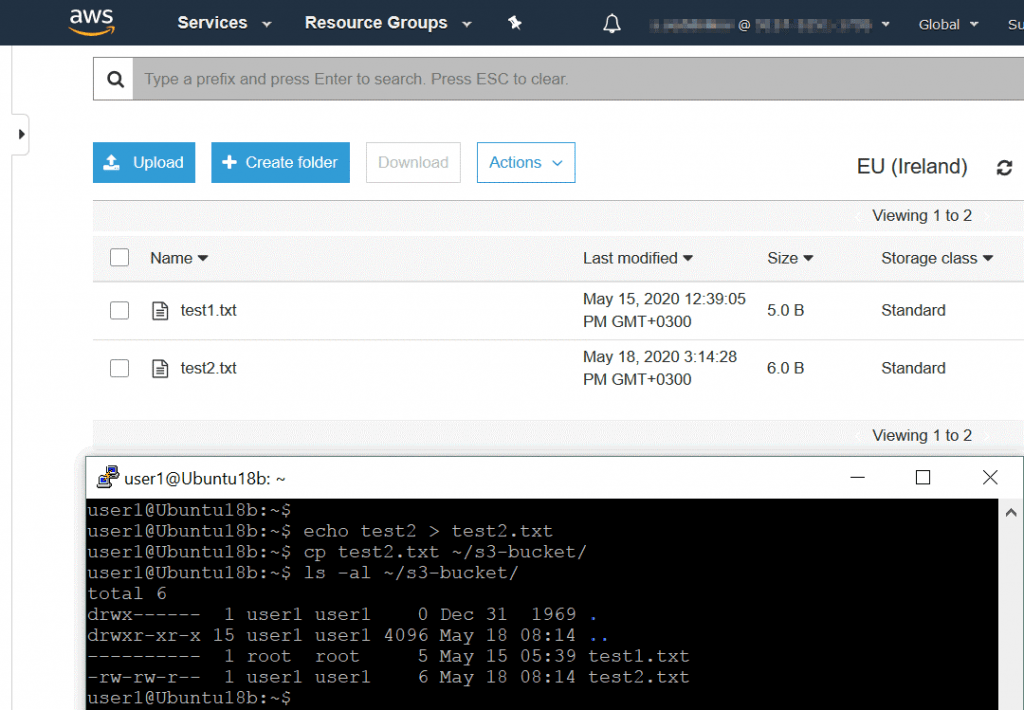
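For scripts, the manual mount | grep check can be replaced by a test that returns an exit status. The following is a sketch under stated assumptions: it is Linux only and reads /proc/mounts, and the is_mounted and copy_if_mounted names are hypothetical. Pass the directory without a trailing slash, exactly as it appears in the mount output.

```shell
# Hypothetical helper: succeed if the directory appears as a mount point
# in /proc/mounts (the second field of each line).
is_mounted() {
    awk -v dir="$1" '$2 == dir { found = 1 } END { exit !found }' /proc/mounts
}

# Copy a file into the bucket directory only when the bucket is mounted,
# so that data does not land on the local disk by mistake.
copy_if_mounted() {
    cim_src="$1"
    cim_mount="$2"
    if is_mounted "$cim_mount"; then
        cp "$cim_src" "$cim_mount/"
    else
        echo "$cim_mount is not mounted; skipping copy of $cim_src" >&2
        return 1
    fi
}

# Usage (left commented out; requires the bucket from this walkthrough):
# copy_if_mounted test2.txt "$HOME/s3-bucket"
```

The guard matters for automated backups: if the bucket is not mounted, a plain cp would write into the empty local directory and report success.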

Now you can try to create a new file on your hard disk drive and copy that file to the S3 bucket in your Linux console.

echo test2 > test2.txt

cp test2.txt ~/s3-bucket/

Refresh the AWS web page where the files in your bucket are displayed. You should see the new test2.txt file, which was copied to the S3 bucket from the Linux console through the directory to which the bucket is mounted.

How to mount an S3 bucket on Linux boot automatically

If you want to configure automatic mounting of an S3 bucket with S3FS on your Linux machine, create the credentials file as /etc/passwd-s3fs, which is the standard system-wide location. After creating this file, you don’t need to use the -o passwd_file option to set the location of the file with your AWS keys manually.

Create the /etc/passwd-s3fs file (the commands in this section need root privileges, so run them as root or with sudo):

vim /etc/passwd-s3fs

Note: If the vim text editor is not installed on your Linux system yet, run the apt-get install vim command.

Enter your AWS access key and secret access key as explained above.

AKIA4SK3HPQ9FLWO8AMB:esrhLH4m1Da+3fJoU5xet1/ivsZ+Pay73BcSnzcP

As an alternative you can store the keys in the /etc/passwd-s3fs file with the command:

echo AKIA4SK3HPQ9FLWO8AMB:esrhLH4m1Da+3fJoU5xet1/ivsZ+Pay73BcSnzcP > /etc/passwd-s3fs

Set the required permissions for the /etc/passwd-s3fs file:

chmod 640 /etc/passwd-s3fs

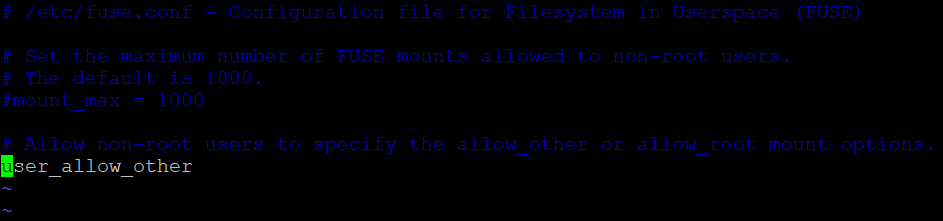

Edit the FUSE configuration file:

/etc/fuse.conf

Uncomment the user_allow_other line if you want to allow other (non-root) users on your Linux machine to use Amazon S3 for file sharing.

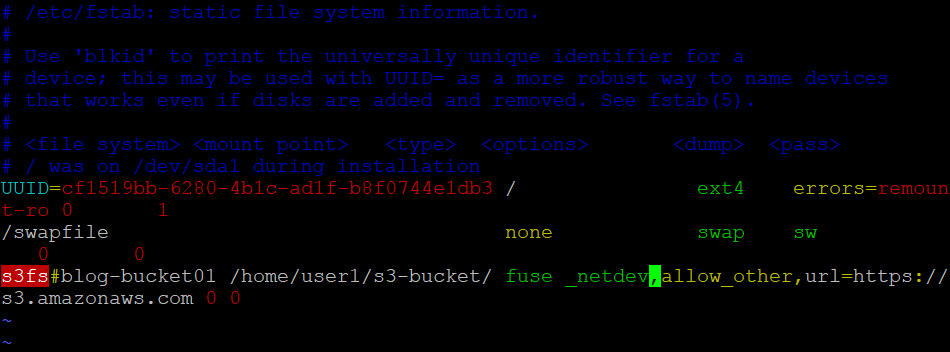

Open /etc/fstab with a text editor:

vim /etc/fstab

Add the following line at the end of the file:

s3fs#blog-bucket01 /home/user1/s3-bucket/ fuse _netdev,allow_other,url=https://s3.amazonaws.com 0 0

Save the edited /etc/fstab file and quit the text editor.

Note: If you want to set the owner and group, you can use the uid=1001, gid=1001, and mp_umask=002 options (change the numeric values of the user ID, group ID, and umask according to your configuration). If you want to enable the local cache, use the use_cache=/tmp option (set a custom directory instead of /tmp if needed). The retries option sets how many times S3FS retries a failed S3 request; for example, retries=5 sets five retries. On the command line, each option is passed with -o (for example, -o uid=1001); in /etc/fstab, append the options to the comma-separated list in the fourth field.
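To show how the optional parameters fit into the /etc/fstab entry, here is a small sketch that builds the line from its parts. The make_s3fs_fstab_line function name is hypothetical. The base options are the ones used in this walkthrough, and the extra options are examples to adapt to your configuration.

```shell
# Hypothetical helper: print an /etc/fstab line for an S3FS mount.
#   $1 - bucket name
#   $2 - mount point
#   $3 - optional extra options, comma-separated (for example uid=1001,gid=1001)
make_s3fs_fstab_line() {
    fl_bucket="$1"
    fl_mount_point="$2"
    fl_extra="${3:-}"
    # Base options taken from the fstab entry in this walkthrough.
    fl_opts="_netdev,allow_other,url=https://s3.amazonaws.com"
    if [ -n "$fl_extra" ]; then
        fl_opts="$fl_opts,$fl_extra"
    fi
    printf 's3fs#%s %s fuse %s 0 0\n' "$fl_bucket" "$fl_mount_point" "$fl_opts"
}

# Print the entry with owner, group, umask and cache options added.
make_s3fs_fstab_line blog-bucket01 /home/user1/s3-bucket/ \
    "uid=1001,gid=1001,mp_umask=002,use_cache=/tmp"
```

Review the printed line before adding it to /etc/fstab, since a malformed entry can interfere with booting.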

Reboot the Ubuntu machine to check whether the S3 bucket is mounted automatically on system boot:

init 6

Wait until your Linux machine is booted.

You can run the following commands to check whether the AWS S3 bucket was mounted automatically to the s3-bucket directory on Ubuntu boot.

mount | grep bucket

df -h | grep bucket

ls -al /home/user1/s3-bucket/

In our case, the S3 bucket has been mounted automatically to the specified Linux directory on Ubuntu boot (see the screenshot below). The configuration was applied successfully.

S3FS also supports working with rsync and file caching to reduce traffic.

Mounting S3 as a File System in macOS

You can mount Amazon S3 cloud storage in macOS in the same way as you mount an S3 bucket in Linux. You need to install S3FS on macOS and set the permissions and Amazon keys. In this example, macOS 10.15 Catalina is used. The name of the S3 bucket is blog-bucket01, the macOS user name is user1, and the directory used as a mount point for the bucket is /Volumes/s3-bucket/. Let’s look at the configuration step by step.

Install homebrew, which is a package manager for macOS used to install applications from online software repositories:

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install.sh)"

Install osxfuse:

brew cask install osxfuse

Reboot the system:

sudo shutdown -r now

Install S3FS:

brew install s3fs

Once S3FS is installed, set the access key and secret access key for your Amazon S3 bucket. You can define keys for the current session if you need to mount the bucket for one time or you are going to mount the bucket infrequently:

export AWSACCESSKEYID=AKIA4SK3HPQ9FLWO8AMB

export AWSSECRETACCESSKEY=esrhLH4m1Da+3fJoU5xet1/ivsZ+Pay73BcSnzcP

If you are going to use a mounted bucket regularly, set your AWS keys in the configuration file used by S3FS for your macOS user account:

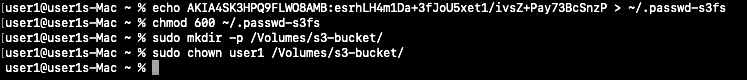

echo AKIA4SK3HPQ9FLWO8AMB:esrhLH4m1Da+3fJoU5xet1/ivsZ+Pay73BcSnzcP > ~/.passwd-s3fs

If you have multiple buckets with different keys, add one line per bucket in the following format (use >> instead of > to append to an existing file):

echo bucket-name:access-key:secret-key > ~/.passwd-s3fs

Set the correct permissions to allow read and write access only for the owner:

chmod 600 ~/.passwd-s3fs
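Because the key file accepts two line formats (access-key:secret-key and bucket-name:access-key:secret-key), a quick format check can catch typing mistakes before you try to mount. This is a minimal sketch, assuming one entry per line. The check_passwd_s3fs function name is hypothetical, the values are placeholders, and the check only counts colon-separated fields; it does not validate the keys themselves.

```shell
# Hypothetical helper: verify that every non-empty line has 2 or 3
# colon-separated fields. Returns non-zero if any line looks malformed.
check_passwd_s3fs() {
    awk -F: '
        NF == 0 { next }    # ignore blank lines
        NF != 2 && NF != 3 {
            printf "line %d: expected 2 or 3 colon-separated fields, found %d\n", NR, NF
            bad = 1
        }
        END { exit (bad ? 1 : 0) }
    ' "$1"
}

# Demonstration with placeholder entries in a temporary file.
demo_passwd="$(mktemp)"
printf '%s\n' \
    'EXAMPLEACCESSKEYID:exampleSecretAccessKey' \
    'blog-bucket01:EXAMPLEACCESSKEYID:exampleSecretAccessKey' > "$demo_passwd"
check_passwd_s3fs "$demo_passwd" && echo "format looks valid"
```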

Create a directory to be used as a mount point for the Amazon S3 bucket:

sudo mkdir -p /Volumes/s3-bucket/

Your user account must be set as the owner for the created directory:

sudo chown user1 /Volumes/s3-bucket/

Mount the bucket with S3FS:

s3fs blog-bucket01 /Volumes/s3-bucket/

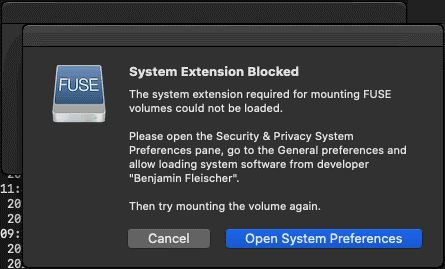

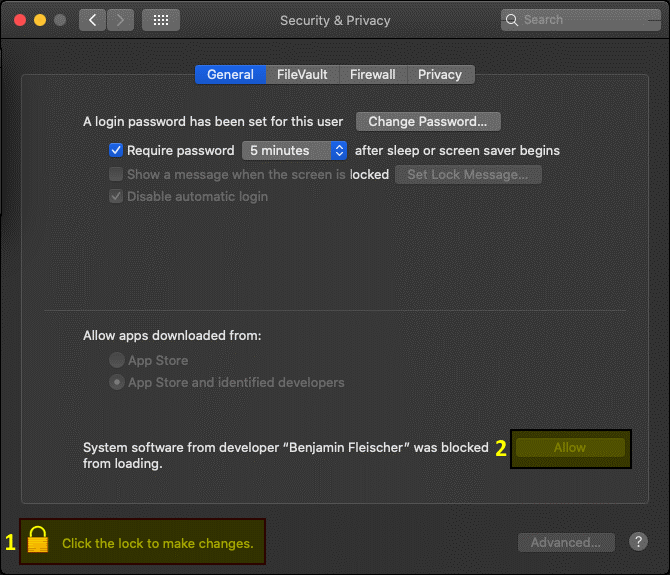

The macOS security warning is displayed in the dialog window. Click Open System Preferences to allow the S3FS application and related connections.

In the Security & Privacy window click the lock to make changes and then hit the Allow button.

Run the mounting command once again:

s3fs blog-bucket01 /Volumes/s3-bucket/

A popup warning message is displayed: Terminal would like to access files on a network volume. Click OK to allow access.

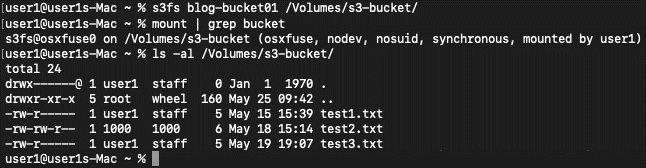

Check whether the bucket has been mounted:

mount | grep bucket

Check the contents of the bucket:

ls -al /Volumes/s3-bucket/

The bucket is mounted successfully. You can view, copy and delete files in the bucket.

You can try to configure mounting an S3 bucket on user login with launchd.

Mounting Amazon S3 Cloud Storage in Windows

You can try wins3fs, which is a solution equivalent to S3FS for mounting Amazon S3 cloud storage as a network disk in Windows. However, in this section we are going to use rclone. Rclone is a command line tool that can be used to mount and synchronize cloud storage such as Amazon S3 buckets, Google Cloud Storage, Google Drive, Microsoft OneDrive, Dropbox, and so on.

Rclone is a free open source tool that can be downloaded from the official website and from GitHub. The release used in this walkthrough is available on GitHub:

https://github.com/rclone/rclone/releases/tag/v1.51.0

Let’s use the direct link from the official website:

https://downloads.rclone.org/v1.51.0/rclone-v1.51.0-windows-amd64.zip

The following actions are performed in the command line interface and may be useful for users who use Windows without a GUI on servers or VMs.

Open Windows PowerShell as Administrator.

Create the directory to download and store rclone files:

mkdir c:\rclone

Go to the created directory:

cd c:\rclone

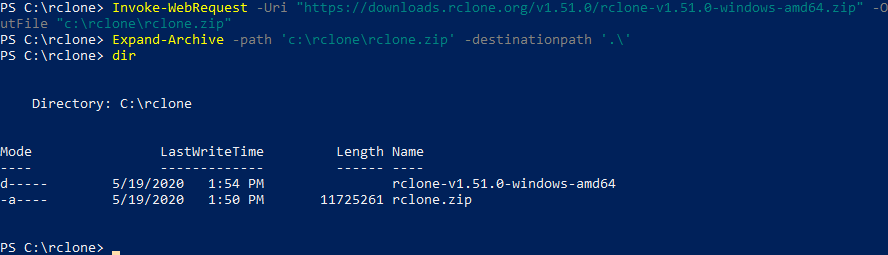

Download rclone by using the direct link mentioned above:

Invoke-WebRequest -Uri "https://downloads.rclone.org/v1.51.0/rclone-v1.51.0-windows-amd64.zip" -OutFile "c:\rclone\rclone.zip"

Extract files from the downloaded archive:

Expand-Archive -Path 'c:\rclone\rclone.zip' -DestinationPath '.\'

Check the contents of the directory:

dir

The files are extracted to C:\rclone\rclone-v1.51.0-windows-amd64 in this case.

Note: In this example, the name of the rclone directory after extracting files is rclone-v1.51.0-windows-amd64. However, it is not recommended to use dots (.) in directory names. You can rename the directory to rclone-v1-51-win64, for example.

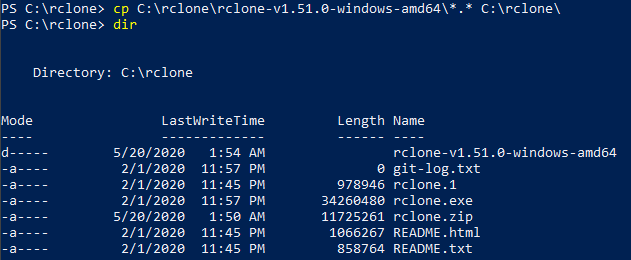

Let’s copy the extracted files to C:\rclone\ to avoid dots in the directory name:

cp C:\rclone\rclone-v1.51.0-windows-amd64\*.* C:\rclone\

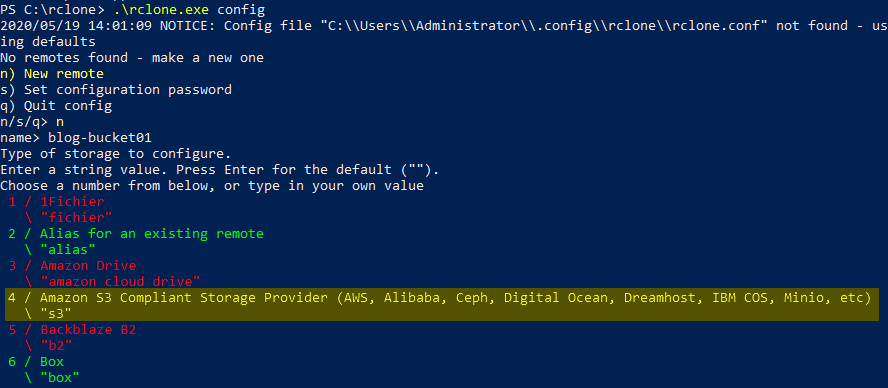

Run rclone in the configuring mode:

.\rclone.exe config

The configurator works as a wizard in command line mode. You have to select the needed parameters at each step of the wizard.

Type n and press Enter to select the New remote option.

n/s/q> n

Enter a name for the new remote. In this example, the remote is given the same name as the S3 bucket:

name> blog-bucket01

After entering the name, select the type of cloud storage to configure. Type 4 to select Amazon S3 cloud storage.

Storage> 4

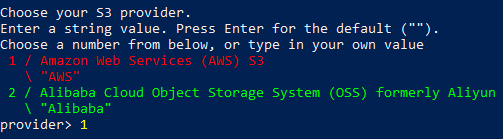

Choose your S3 provider. Type 1 to select Amazon Web Services S3.

provider> 1

Get AWS credentials from runtime (true or false). 1 (false) is used by default. Press Enter without typing anything to use the default value.

env_auth> 1

Enter your AWS access key:

access_key_id> AKIA4SK3HPQ9FLWO8AMB

Enter your secret access key:

secret_access_key> esrhLH4m1Da+3fJoU5xet1/ivsZ+Pay73BcSnzcP

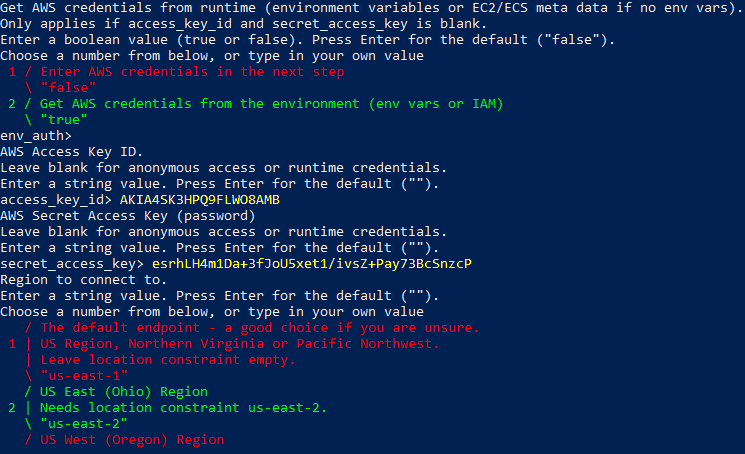

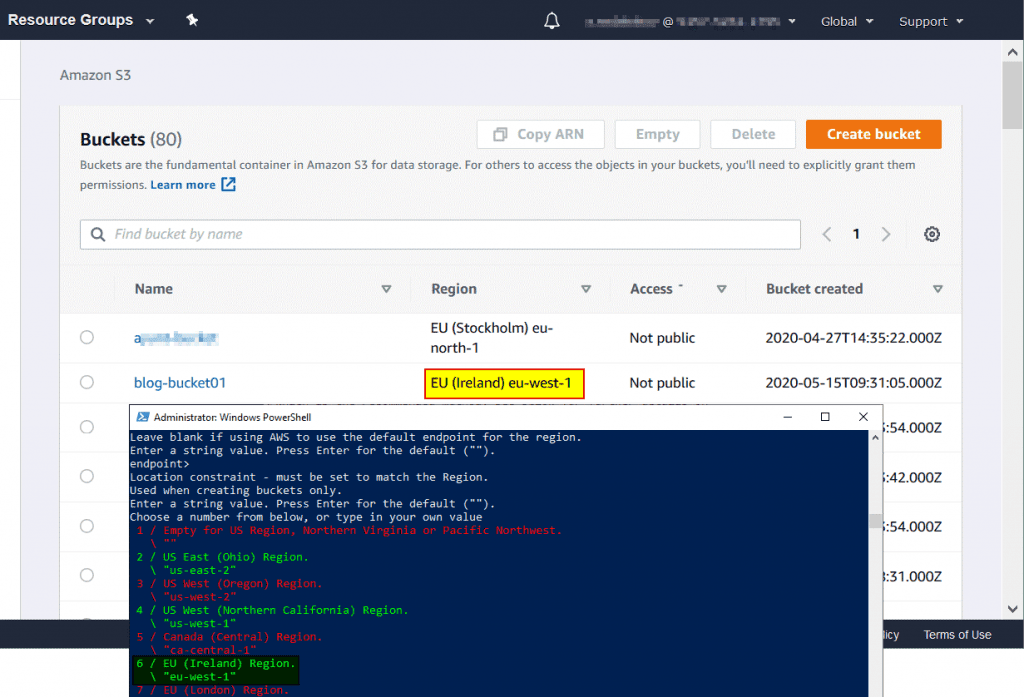

Region to connect to. EU (Ireland) eu-west-1 is used for our bucket in this example and we should type 6.

region> 6

Endpoint for S3 API. Leave blank if using AWS to use the default endpoint for the region. Press Enter.

endpoint>

Location constraint must be set to match the Region. Type 6 to select the EU (Ireland) Region (eu-west-1).

location_constraint> 6

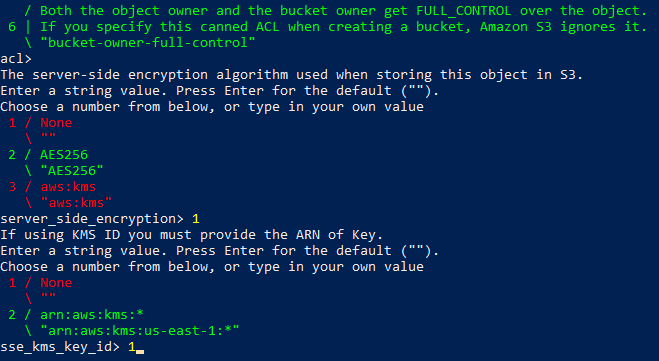

Canned ACL used when creating buckets and storing or copying objects. Press Enter to use the default parameters.

acl>

Specify the server-side encryption algorithm used when storing this object in S3. In our case encryption is disabled, and we have to type 1 (None).

server_side_encryption> 1

If using KMS ID, you must provide the ARN of Key. As encryption is not used, type 1 (None).

sse_kms_key_id> 1

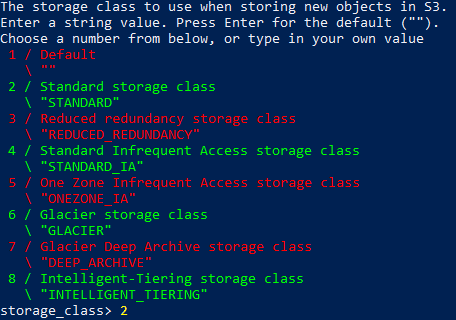

Select the storage class to use when storing new objects in S3. Enter a string value. The standard storage class option (2) is suitable in our case.

storage_class> 2

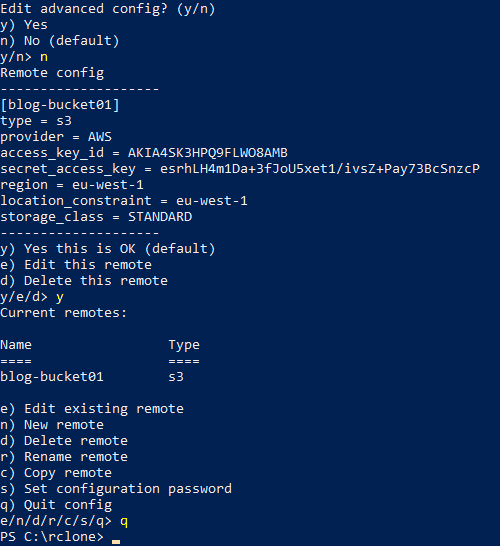

Edit advanced config? (y/n)

y/n> n

Check your configuration and type y (yes) if everything is correct.

y/e/d> y

Type q to quit the configuration wizard.

e/n/d/r/c/s/q> q
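The wizard stores its answers in the rclone configuration file (the rclone config file command prints its location). As a rough sketch of what the resulting section might look like for the choices made above, the shell function below prints an equivalent block. The print_rclone_s3_remote function name is hypothetical, the key values are placeholders, and the exact set of keys written by your rclone version may differ. It can be run in any POSIX shell.

```shell
# Hypothetical helper: print an rclone config section for an AWS S3 remote.
#   $1 - remote name, $2 - access key ID, $3 - secret access key, $4 - region
print_rclone_s3_remote() {
    rc_remote="$1"
    rc_key_id="$2"
    rc_secret="$3"
    rc_region="$4"
    cat <<EOF
[$rc_remote]
type = s3
provider = AWS
env_auth = false
access_key_id = $rc_key_id
secret_access_key = $rc_secret
region = $rc_region
location_constraint = $rc_region
storage_class = STANDARD
EOF
}

# Placeholder values; eu-west-1 matches the Region chosen in the wizard.
print_rclone_s3_remote blog-bucket01 EXAMPLEACCESSKEYID exampleSecretAccessKey eu-west-1
```

Comparing this output with your own configuration file is a quick way to confirm that the Region and location constraint match.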

Rclone is now configured to work with Amazon S3 cloud storage. Make sure you have the correct date and time settings on your Windows machine. Otherwise an error can occur when mounting an S3 bucket as a network drive to your Windows machine: Time may be set wrong. The difference between the request time and the current time is too large.

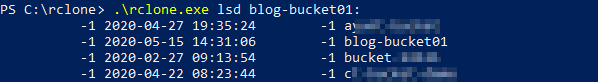

Run rclone in the directory where rclone.exe is located and list buckets available for your AWS account:

.\rclone.exe lsd blog-bucket01:

You can add c:\rclone to the Path environment variable. This allows you to run rclone from any directory without switching to the directory where rclone.exe is stored.

As you can see on the screenshot above, access to Amazon S3 cloud storage is configured correctly and a list of buckets is displayed (including the blog-bucket01 that is used in this tutorial).

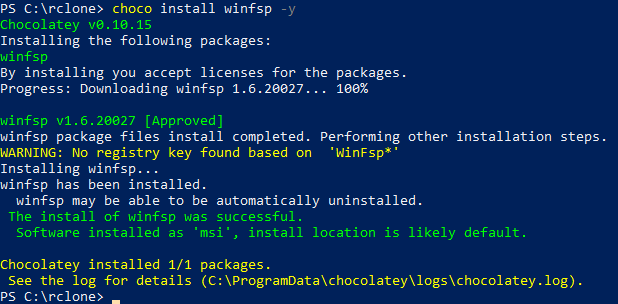

Install Chocolatey, which is a Windows package manager that can be used to install applications from online repositories:

Set-ExecutionPolicy Bypass -Scope Process -Force; iex ((New-Object System.Net.WebClient).DownloadString('https://chocolatey.org/install.ps1'))

WinFSP (Windows File System Proxy) is the Windows counterpart of FUSE on Linux. It is fast and stable, and it allows you to create user mode file systems.

Install WinFSP from Chocolatey repositories:

choco install winfsp -y

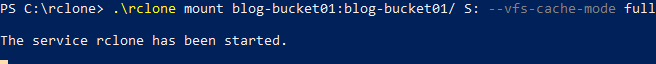

Now you can mount your Amazon S3 bucket to your Windows system as a network drive. Let’s mount the blog-bucket01 as S:

.\rclone mount blog-bucket01:blog-bucket01/ S: --vfs-cache-mode full

Here the first “blog-bucket01” is the remote name entered in the first step of the rclone configuration wizard, and the second “blog-bucket01”, which follows the colon, is the Amazon S3 bucket name set in the AWS web interface.

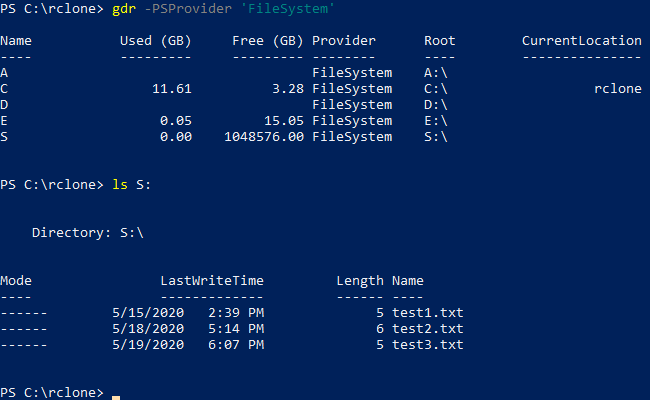

List all connected disks and partitions:

gdr -PSProvider 'FileSystem'

Check the content of the mapped network drive:

ls S:

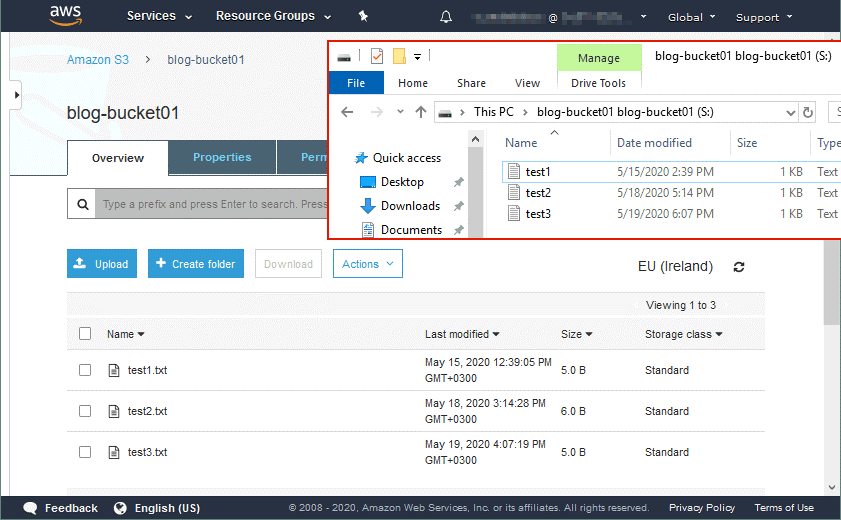

The S3 bucket is now mounted as a network drive (S:). You can see three txt files stored in the blog-bucket01 in Amazon S3 cloud storage by using another instance of Windows PowerShell or Windows command line.

If your Windows has a graphical user interface, you can use that interface to download and upload files to your Amazon S3 cloud storage. If you copy a file by using a Windows interface (a graphical user interface or command line interface), data will be synchronized in a moment and you will see a new file in both the Windows interface and AWS web interface.

If you press Ctrl+C or close the CMD or PowerShell window where rclone is running (“The service rclone has been started” is displayed in that CMD or PowerShell instance), your Amazon S3 bucket will be disconnected from the mount point (S: in this case).

How to automate mounting an S3 bucket on Windows boot

It is convenient when the bucket is mounted as a network drive automatically on Windows boot. Let’s find out how to configure automatic mounting of the S3 bucket in Windows.

Create the rclone-S3.cmd file in the C:\rclone\ directory.

Add the string to the rclone-S3.cmd file:

C:\rclone\rclone.exe mount blog-bucket01:blog-bucket01/ S: --vfs-cache-mode full

Save the CMD file. You can run this CMD file instead of typing the command to mount the S3 bucket manually.

Copy the rclone-S3.cmd file to the startup folder for all users:

C:\ProgramData\Microsoft\Windows\Start Menu\Programs\StartUp

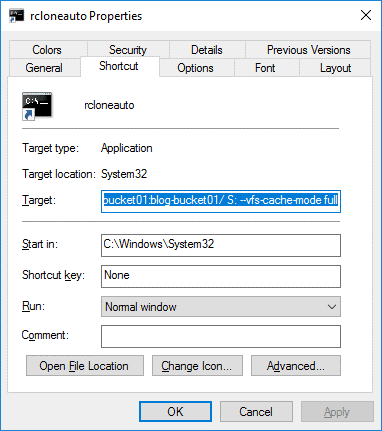

As an alternative, you can create a shortcut to C:\Windows\System32\cmd.exe and set arguments needed to mount an S3 bucket in the target properties:

C:\Windows\System32\cmd.exe /k cd c:\rclone & rclone mount blog-bucket01:blog-bucket01/ S: --vfs-cache-mode full

Then add the edited shortcut to the Windows startup folder:

C:\ProgramData\Microsoft\Windows\Start Menu\Programs\StartUp

There is a small disadvantage: a command line window with the “The service rclone has been started” message is displayed after attaching an S3 bucket to your Windows machine as a network drive. You can try to configure automatic mounting of the S3 bucket by using Windows Task Scheduler or NSSM, which is a free tool to create and configure Windows services and their automatic startup.

Conclusion

When you know how to mount Amazon S3 cloud storage as a file system on the most popular operating systems, sharing files with Amazon S3 becomes more convenient. An Amazon S3 bucket can be mounted by using S3FS in Linux and macOS, and by using rclone or wins3fs in Windows. Once a bucket is mounted to a local directory, automating the process of copying data to Amazon S3 is more convenient than using the web interface. You can also back up your data to Amazon S3 by using the interface of your operating system.
